The methods for achieving inter-process/thread communication include:

- Inter-process communication methods: file mapping, shared memory, anonymous pipes, named pipes, message slots, clipboard, dynamic data exchange, object linking and embedding, dynamic link libraries, remote procedure calls, etc.

- Thread synchronization methods: events, critical sections, mutexes, semaphores.

Actually, methods like pipes and shared memory can achieve inter-process synchronization. For example, using pipes is very convenient:

1 | // Create a named pipe |

Then, the following code implements a simple inter-process communication functionality:

1 | // writefifo.cc |

The operation is shown as follows:

Now let’s describe the requirements for implementing inter-process synchronization from the operating system’s perspective.

Implementing Inter Process Communication (IPC) involves three issues:

- How one process passes information to another process.

- Ensuring that two or more processes do not overlap during critical activities. For example, in an airplane ticket booking system, two different processes compete for the last seat for different customers.

- Correct sequencing; for instance, if process A generates data that process B needs, then process B must wait (block) until A finishes generating the data.

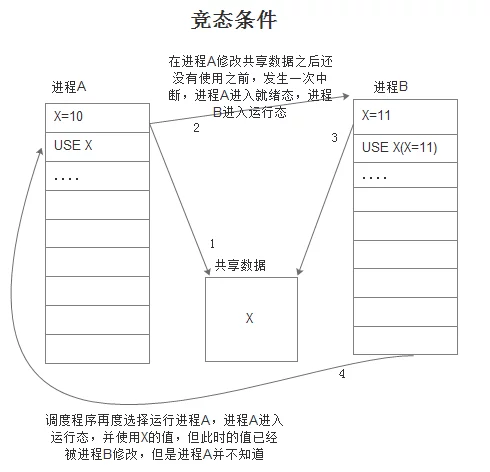

Race Conditions

In some operating systems, cooperating processes may share common storage areas that both can read and write.

When two or more processes read and write certain shared data, the final result depends on the precise timing of the processes, which is known as a race condition.

For example:

A variable X is shared between process A and process B. It is possible that process A assigns a value to X, but at that moment, the operating system’s scheduler interrupts, process A enters the ready state, and process B enters the running state. It then chooses to run process B, which also assigns a variable to X, using X until the scheduler selects process A again. When the scheduler runs process A again, A does not know that X has already been modified by process B, leading to undefined errors.The reason is that process B operated on X before process A finished using it, resulting in incorrect outcomes, which exemplifies a race condition.

How to Avoid Race Conditions?

In fact, whenever there is shared memory, shared files, or sharing of any resources, there’s a potential for race conditions. The key to avoiding race conditions is to find a way to prevent multiple processes from concurrently reading and writing shared data.

What we need is mutual exclusion, which means ensuring that when one process is using shared data, other processes cannot perform the same operation concurrently.

A part of a process’s time may perform internal calculations or other operations that do not cause race conditions. Some processes may need to access shared memory or shared files or execute actions that could lead to race conditions.

Accessing shared memory operations is referred to as a critical section.

As mentioned above, the cause of a race condition is two processes being in the critical section at the same time, so to prevent race conditions, we must appropriately arrange conditions such that two processes cannot be in the critical section simultaneously.

The four conditions for effectively avoiding processes being in the critical section at the same time are:

- No two processes should be in their critical sections simultaneously.

- No assumptions should be made about CPU speed or the number of CPUs.

- Processes running outside the critical section must not block other processes.

- No process should have to wait indefinitely to enter the critical section.

Several Solutions for Mutual Exclusion in Critical Sections

Single-Processor Systems

In single-processor systems, the simplest way to implement mutual exclusion is to disable all interrupts immediately after a process enters the critical section and re-enable interrupts before leaving the critical section. This ensures that while the currently executing process completes its critical section, other processes cannot be scheduled.

However, giving user processes the authority to disable interrupts is a very bad choice. If a user process disables interrupts and forgets to re-enable them, it could result in system termination (in single-processor systems).

In multi-processor operating systems, however, disabling interrupts only affects the CPU executing the command, while other CPUs continue to run, meaning processes running on them can also enter the critical section.

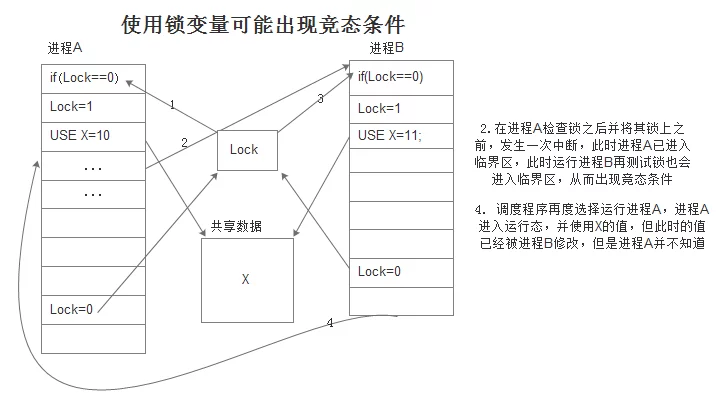

Lock Variables

Another software solution to prevent two processes from entering the critical section simultaneously is to set a shared variable—a lock variable—initialized to 0. When a process wants to enter the critical section, it first checks if this lock is set (to 1). If the lock’s initial value is 0, the process sets it to 1 and enters the critical section. If the lock’s value is already 1, then that process waits until the lock value changes to 0 before entering the critical section.

Note: This solution seems good, but it can also lead to race conditions.

As shown in the following illustration:

Strict Alternation Method

Define a variable turn initialized to 0 to indicate which process is allowed to enter the critical section, for checking or updating shared memory. Initially, process 0 checks turn and finds its value to be 0, allowing it to enter the critical section. Process 1 also sees its value as 0 and enters a loop to repeatedly check turn for when it changes to 1.

Repeatedly testing a variable until a certain value occurs is termed busy waiting. This approach is very wasteful of CPU time and should generally be avoided.

1 | // Process 0 |

1 | // Process 1 |

Busy waiting should only be used in situations where it is reasonable to assume the wait will be very brief.

Locks used for busy waiting are known as spin locks.

However, this solution also has several issues:

- If process 0 enters the critical section quickly exits and sets

turnto 1, at this time both processes 0 and 1 are executing outside the critical section. If process 0 then abruptly resumes its loop from the start, it cannot enter the critical section (becauseturnhas been modified to 1 by process 0). Meanwhile, process 1 is doing non-critical-region work, and does not enter the critical section, causingturnto remain 1. Thus, process 0 will keep looping until process 1 setsturnback to 0 (when it enters and exits the critical section). - This demonstrates that in cases where one process is significantly slower than another, taking turns in the critical section is not a good strategy.

- These issues lead to the consequence that process 0 gets blocked by the process running outside the critical section.

Though using strict alternation can avoid all race conditions, it violates the third condition of the good practices for preventing simultaneous access to the critical section.