To do a good job, one must first sharpen their tools! AIGC tools represented by ChatGPT and Stable Diffusion have emerged, and the AI wave is sweeping across various industries. As developers, how to leverage AI capabilities to improve efficiency in tasks is a question worth studying.

Note that content generated by GPT is not always accurate and can contain errors. Its potential, however, lies in serving as a general-purpose AI assistant. No one has expert-level knowledge in every field, but using GPT effectively in an unfamiliar domain can significantly lower the cost of entry.

In this article, I will introduce some cases of using GPT to assist with work, life, and learning, and explore application scenarios for secondary development on top of the GPT API. At the end of the article, I will share some prompts I use, recommend some useful GPT tools, and present a solution for wrapping Azure OpenAI services with a Cloudflare Worker.

API Request

You can register an OpenAI account to get an API key, but the free quota expires after a limited time, and risk control has recently become strict, so accounts are easily banned.

I recommend applying for Azure-hosted OpenAI services instead, which are stable and available domestically. Be aware, however, that the API calls differ between OpenAI and Azure. At the end of the article, I will provide a Cloudflare Worker solution that bridges this difference.

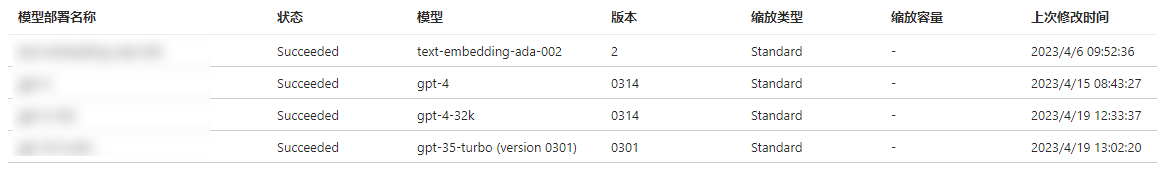

Azure allows you to deploy models as needed:

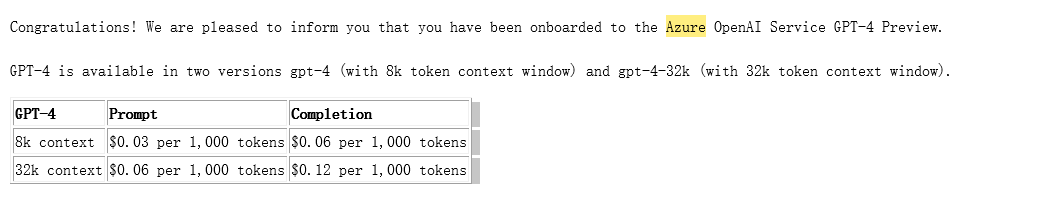

I have also been granted access to GPT-4 on Azure, so I can use the GPT-4 and GPT-4-32K models, which are more powerful (and also more expensive) than GPT-3.5.

Available GPT models include:

- gpt-3.5-turbo

- gpt-4

- gpt-4-32k

This article will still use the official OpenAI API as an example. When you want to call the GPT API, you need to POST an HTTP request:

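    curl --location 'https://api.openai.com/v1/chat/completions' \
    --header 'Content-Type: application/json' \
    --header 'Authorization: Bearer sk-xxxxxxxxxxxxxxxxxxxxxxxxxx' \
    --data '{
      "model": "gpt-3.5-turbo",
      "messages": [{"role": "user", "content": "Hello"}]
    }'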

In messages, you can specify the role and content of the message. GPT offers three types of roles: system, user, and assistant.

- `system` sets a preset role for GPT, such as “You are a professional C++ developer. Please evaluate whether my C++ code adheres to the C++11 standard or above, and respond in Chinese.” Think of it as the information that establishes a persona for GPT.

- `user` is the information that the user wants to provide to GPT, such as the content of a question.

- `assistant` is the information that GPT replies with.
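As a minimal sketch of how these roles fit together, the snippet below builds the JSON body of a chat request in Python. The helper name `build_payload` and the sample question are hypothetical; the model name and the system text come from the examples in this article.

```python
import json

def build_payload(system_prompt, user_text, model="gpt-3.5-turbo"):
    """Build the request body for the chat completions endpoint."""
    return {
        "model": model,
        "messages": [
            # system: establishes the persona for the whole conversation
            {"role": "system", "content": system_prompt},
            # user: the question we actually want answered
            {"role": "user", "content": user_text},
        ],
    }

payload = build_payload(
    "You are a professional C++ developer. Respond in Chinese.",
    "Does this code adhere to the C++11 standard?",  # hypothetical question
)
body = json.dumps(payload)  # string to send as the POST data
```

Messages with the `assistant` role normally appear only when earlier replies are sent back as conversation history.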

Using the above POST request as an example, GPT will respond with a JSON structure:

    {

By parsing this structure, you can obtain the reply content from GPT.
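A sketch of that parsing step in Python. The sample below is hand-written to illustrate the general shape of a chat completion response (the `id`, the reply text, and the token counts are made up); the reply text sits under `choices[0].message.content`.

```python
import json

# Hand-written sample with the general shape of a chat completion response.
sample_response = """
{
  "id": "chatcmpl-example",
  "object": "chat.completion",
  "model": "gpt-3.5-turbo",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Hello! How can I help you?"},
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 8, "completion_tokens": 9, "total_tokens": 17}
}
"""

def extract_reply(raw_json):
    """Return the assistant's reply text from a chat completion response."""
    data = json.loads(raw_json)
    return data["choices"][0]["message"]["content"]

reply = extract_reply(sample_response)
print(reply)
```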

Note: When calling the GPT API, you need to manage the conversation context yourself. This means that every request must include the complete conversation history in `messages`. As the number of chat rounds grows, each request consumes more tokens, so keep the amount of context under control.
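One simple way to bound the context is to always keep the system message and only the most recent rounds. The sketch below shows one possible policy, not the only one: `trim_history` and `max_rounds` are hypothetical names, it counts messages rather than tokens, and it assumes the history alternates between user and assistant messages.

```python
def trim_history(messages, max_rounds=3):
    """Keep system messages plus the last `max_rounds` user/assistant rounds."""
    system = [m for m in messages if m["role"] == "system"]
    if max_rounds <= 0:
        return system
    dialogue = [m for m in messages if m["role"] != "system"]
    # One round is a user message plus the assistant reply, i.e. two entries.
    return system + dialogue[-2 * max_rounds:]

history = [{"role": "system", "content": "You are a helpful assistant."}]
for i in range(5):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})

# Keeps the system message plus the two most recent rounds (3 and 4).
trimmed = trim_history(history, max_rounds=2)
```

A token-based budget would be more precise, but it requires a tokenizer, which this sketch deliberately avoids.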

Daily Usage

Currently, I frequently use GPT to handle tasks such as:

- Translation, checking grammar errors, explaining words

- Explaining, checking, optimizing given code

- Optimizing description wording

- Summarizing documents

- Providing viewpoints Q/A

- "What Is It": letting GPT identify what a piece of content might be and generate a regular expression for it

- Letting GPT assume a role to answer questions

- Generating topic outlines

Let’s illustrate a few examples.

Generating Code

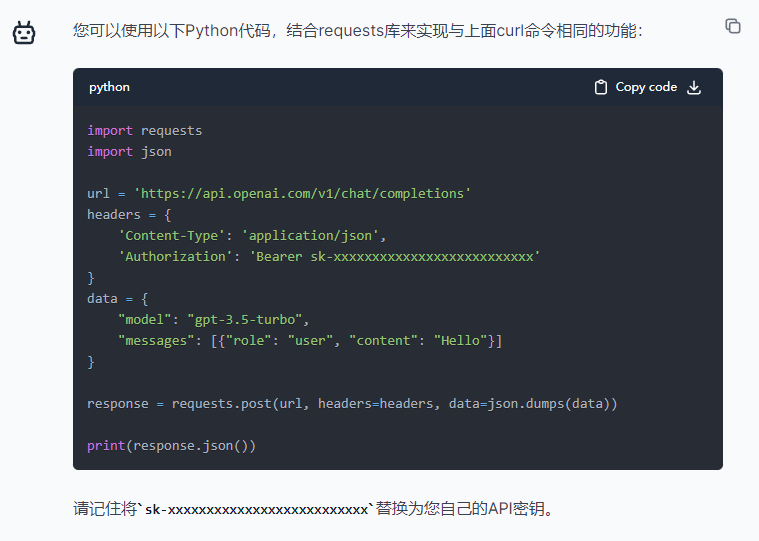

- Sometimes, I need to write Python scripts, but there are too many libraries. In such cases, I can describe the requirements and have GPT generate code for reference.

Taking the POST request above as an example, I asked:

    Convert the following curl command to Python code using the requests library: curl --location 'https://api.openai.com/v1/chat/completions' \ --header 'Content-Type: application/json' \ --header 'Authorization: Bearer sk-xxxxxxxxxxxxxxxxxxxxxxxxxx' \ --data '{ "model": "gpt-3.5-turbo", "messages": [{"role": "user", "content": "Hello"}] }'

And GPT’s response:

Within seconds, it produced the output.

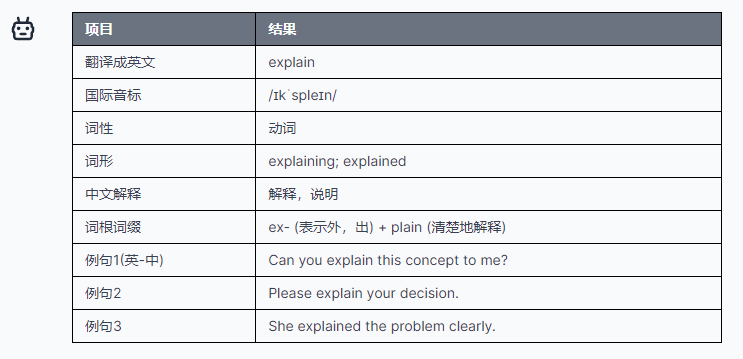

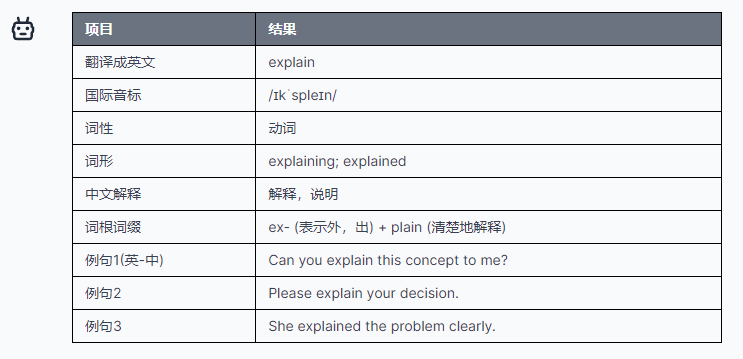

Explaining Words

You can also let GPT explain a word and output it in tabular form:

    Please return the following about Text in a table:

What Is It

Give GPT a piece of content, let it recognize what it is, and provide a regular expression for the content.

    Analyze what the input content might be. Reply in three parts: Maybe, Desc, Regex. Maybe should indicate what it is most likely to be (require accuracy), Desc should provide a description of this thing, and Regex should provide the retrieval regular expression. Input content: https://imzlp.com/posts/96336/

GPT-4’s response:

Maybe: Blog article link

Desc: This is a link to a blog post on imzlp.com, and the article ID is likely 96336.

Regex: `https?:\/\/imzlp\.com\/posts\/\d+\/`
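A quick way to sanity-check a generated regular expression is to run it against a few samples. The sketch below does so in Python; apart from the original input, the sample URLs are made up for illustration.

```python
import re

# Pattern from GPT-4's answer. Python does not require "/" to be escaped,
# but the escaped form is still valid.
pattern = re.compile(r"https?:\/\/imzlp\.com\/posts\/\d+\/")

samples = [
    "https://imzlp.com/posts/96336/",  # the original input
    "http://imzlp.com/posts/1/",       # made-up: plain http, short ID
    "https://imzlp.com/about/",        # made-up: not a post link
]

# fullmatch requires the whole string to match the pattern.
matches = [s for s in samples if pattern.fullmatch(s)]
print(matches)
```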

Checking Code Errors

Give GPT a piece of code and ask it to check for problems. Here is a sample with an out-of-bounds array access:

    int iArry[10] = {0};

The prompt fed to GPT-4 was:

    Go line-by-line and do a detailed inspection of my code looking for bugs. If you see a bug, identify it. Explain what the bug is and provide a fix.

GPT’s output:

Problem 1: Array Out-of-Bounds

Problem Description

In the given loop, the number of iterations should be less than the length of the array. The current condition index <= 10 will access the 11th element of the array, causing an out-of-bounds error.

Solution

Change the loop condition to index < 10:

    int iArry[10] = {0};

Problem 2: Poor Output Format

Problem Description

The current printf call does not include a newline character, which causes all output integers to display on the same line, making the output hard to read.

Solution

Add a newline character \n to the printf function:

    int iArry[10] = {0};

Result

The revised code after fixing:

    int iArry[10] = {0};

The results are truly amazing.

Development Exploration

Natural Language Parsing of Compile Errors

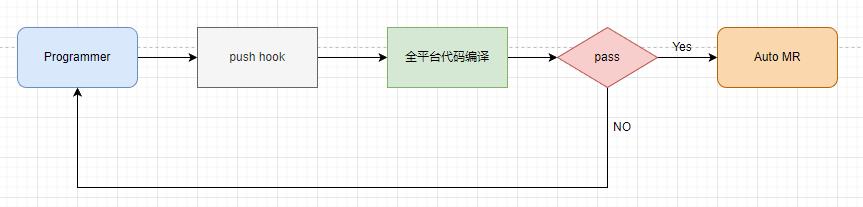

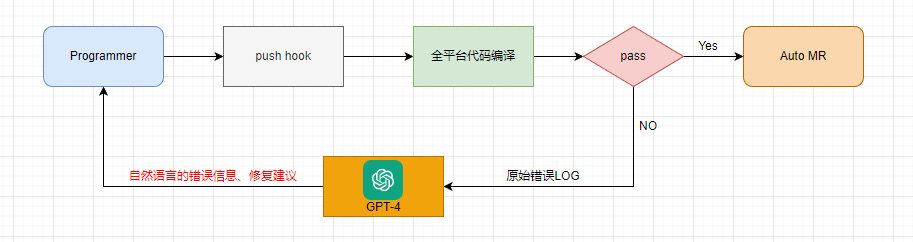

During development, a common issue is handling cross-platform errors in code.

Usually, when code is submitted, the CI triggers an automatic compilation. If problems occur, it throws errors, and the submitter needs to check the compilation log on the CI platform before addressing the issues.

I think AI can improve this process: it parses the error log and generates a natural-language description of each error along with a suggested solution.

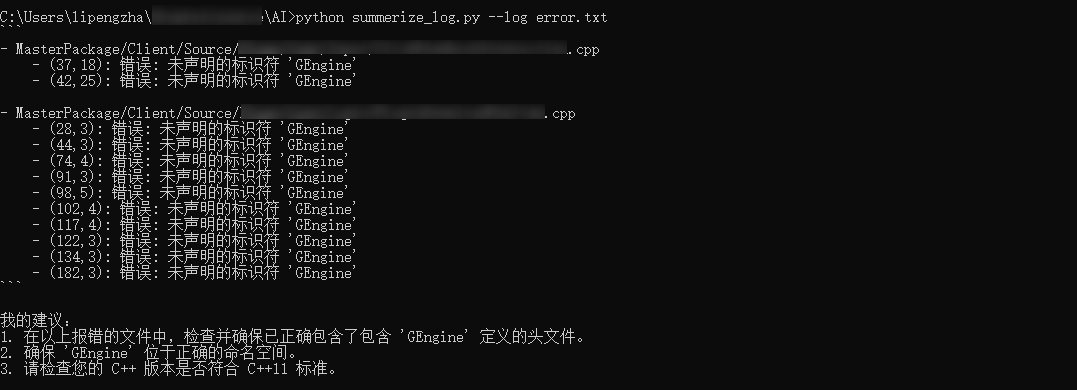

First, a regular expression detects the error lines in the compilation log: `\((\d+)\):\s+error`. It matches text such as `(459): error`, which helps locate all errors in the compiler log file.

    HotPatcherRuntime\Private\FlibPakHelper.cpp(459): error C2440: 'initializing': cannot convert from 'FSharedPakReader' to 'FArchive *'
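The detection step can be sketched in Python as follows. The first pattern is the one given above. The second is my own assumed extension: the clang-style line quoted later in this article uses `(922,10): error`, with a column number, which the first pattern does not match. The `find_errors` helper and the third log line are hypothetical.

```python
import re

# Pattern from the article: "(<line>): error", as in the C2440 sample.
LINE_ONLY = re.compile(r"\((\d+)\):\s+error")
# Assumed extension: also accept "(<line>,<column>): error".
LINE_AND_COLUMN = re.compile(r"\((\d+)(?:,\d+)?\):\s+error")

log_lines = [
    r"HotPatcherRuntime\Private\FlibPakHelper.cpp(459): error C2440: 'initializing': cannot convert from 'FSharedPakReader' to 'FArchive *'",
    "D:/Client/Source/GWorld/Skill/SkillMgr.cpp(922,10): error: implicit conversion of nullptr constant to 'bool' [-Werror,-Wnull-conversion]",
    "Building 12 actions with 8 processes...",  # made-up non-error line
]

def find_errors(lines, pattern):
    """Return (line_number, text) for every log line the pattern matches."""
    found = []
    for text in lines:
        m = pattern.search(text)
        if m:
            found.append((int(m.group(1)), text))
    return found

line_only = find_errors(log_lines, LINE_ONLY)
with_column = find_errors(log_lines, LINE_AND_COLUMN)
print([n for n, _ in line_only], [n for n, _ in with_column])
```

Only the matched lines need to be sent to GPT, which keeps the prompt short even when the full log is large.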

Then, feeding it to GPT-4 provides a description of the error log:

    Error:

Automate this process with a script:

    python summerize_log.py --log D:\Client\Compiler.log

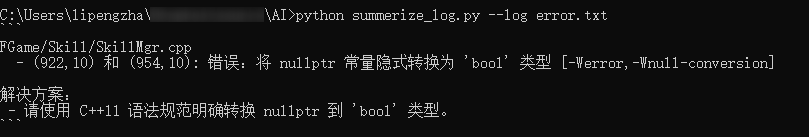

Given English error logs, it can still respond in Chinese:

    D:/Client/Source/GWorld/Skill/SkillMgr.cpp(922,10): error: implicit conversion of nullptr constant to 'bool' [-Werror,-Wnull-conversion]

Integrating this into the CI process would automatically organize error information and send it to the corresponding developer, saving time spent checking logs.

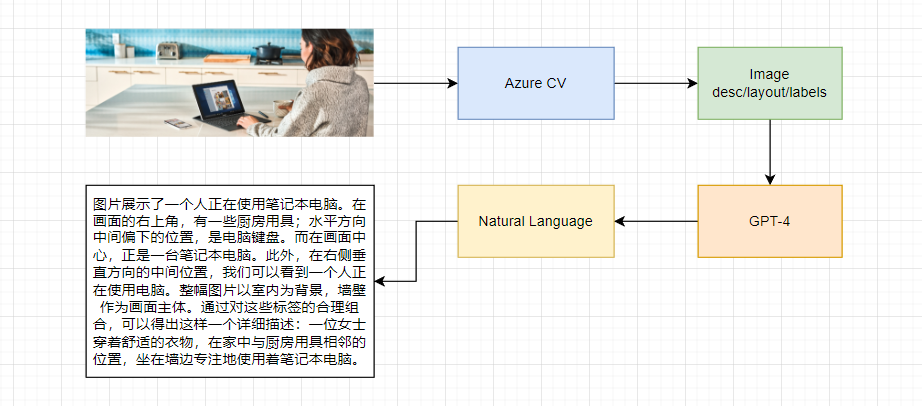

AzureCV Combined with GPT

Currently, GPT does not support multimodal input (it may in the future), such as sending images to GPT for content analysis.

However, at this stage, Azure CV’s image detection can be utilized to indirectly achieve this. After Azure CV recognizes the image information, we can feed it to GPT to generate a natural language description.

The main process is as follows:

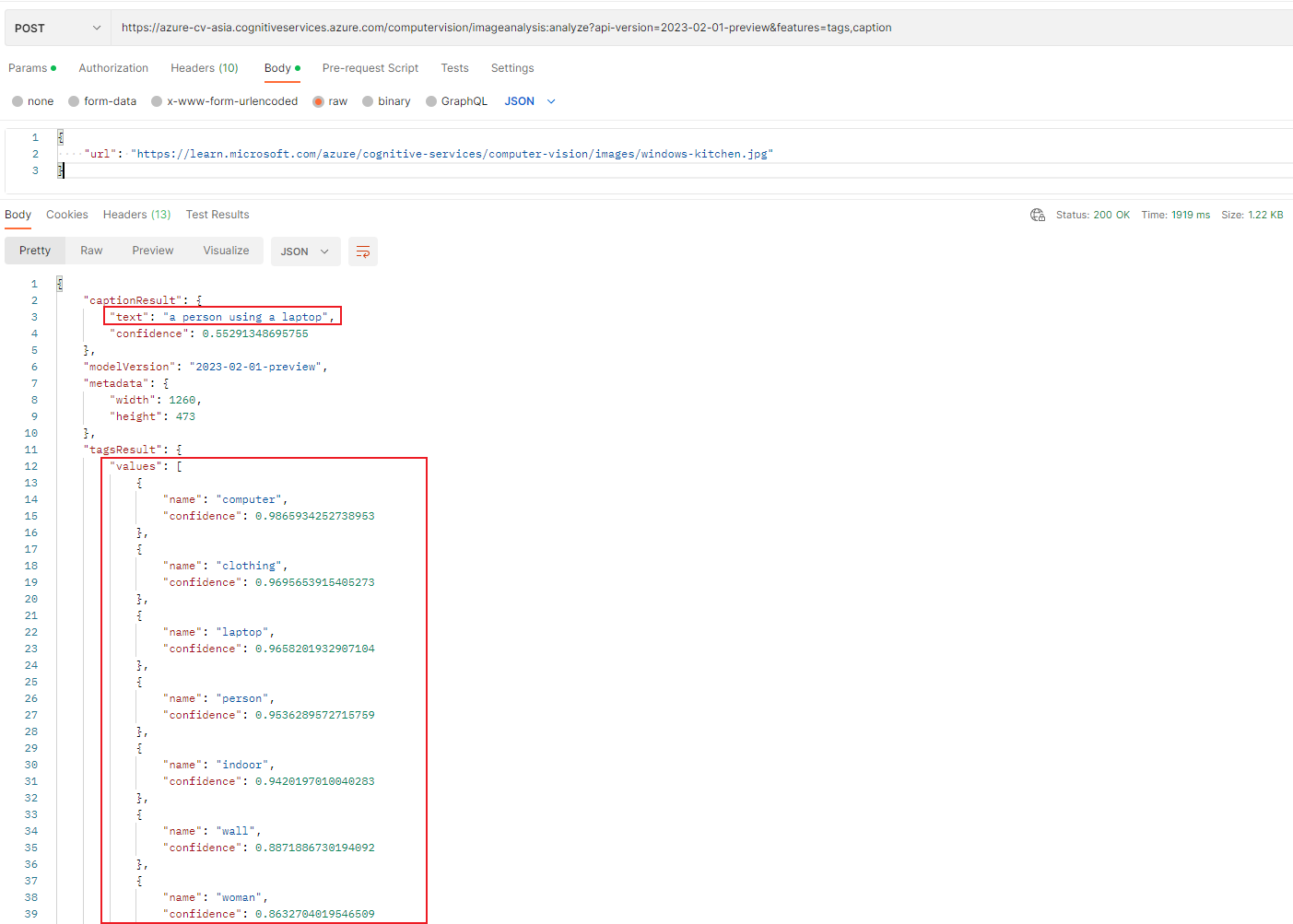

Azure CV’s visual API can obtain information in images. The latest model version is Image Analysis 4.0 Analyze API (preview).

    curl --location 'https://azure-cv-asia.cognitiveservices.azure.com/computervision/imageanalysis:analyze?features=read%2Ccaption%2Ccaption%2Cobjects%2Ctags%2Cpeople&api-version=2023-02-01-preview' \

By setting the `features` parameter, you can request different categories of information:

| URL parameter | Value | Description |
|---|---|---|
| features | Read | Reads the visible text in the image and outputs it as structured JSON data. |
| features | Caption | Describes the image content with a complete sentence in supported languages. |
| features | DenseCaption | Generates detailed captions for individual regions in the image. |
| features | SmartCrops | Finds the rectangle coordinates that would crop the image to a desired aspect ratio while preserving the area of interest. |
| features | Objects | Detects various objects within an image, including the approximate location. The Objects argument is only available in English. |
| features | Tags | Tags the image with a detailed list of words related to the image content. |
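As a small illustration of how the `features` values end up in the request URL, the sketch below builds the query string in Python. The endpoint and API version are the ones from the curl command above; the helper name `build_analyze_url` is hypothetical.

```python
from urllib.parse import urlencode

ENDPOINT = "https://azure-cv-asia.cognitiveservices.azure.com"
API_VERSION = "2023-02-01-preview"

def build_analyze_url(features, endpoint=ENDPOINT, api_version=API_VERSION):
    """Build the Analyze URL; `features` is a list such as ["read", "caption"]."""
    query = urlencode({
        "features": ",".join(features),  # the comma is encoded as %2C
        "api-version": api_version,
    })
    return f"{endpoint}/computervision/imageanalysis:analyze?{query}"

url = build_analyze_url(["read", "caption", "objects", "tags", "people"])
print(url)
```

Requesting only the features you need keeps the response small and easier to turn into a prompt.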

By analyzing the results of the POST request, you can obtain image descriptions and elements within the scene (tags and locations):

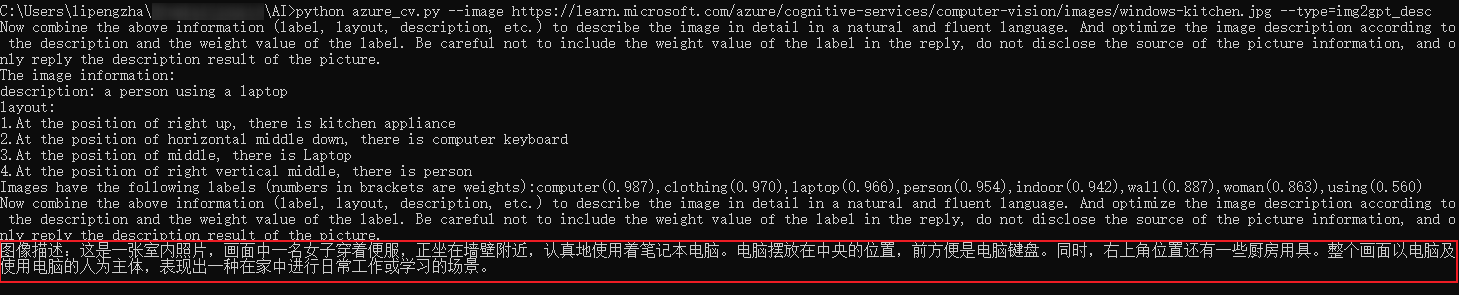

Parsing this result allows you to generate a prompt for GPT-4 describing an image:

    description: a person using a laptop
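The conversion from an analysis result to a text prompt might look like the sketch below. Everything in it is an assumption made for illustration: the sample result is hand-written, the field names (`captionResult`, `objectsResult`, `tagsResult`) reflect my understanding of the preview response and should be checked against a real reply, and the prompt layout beyond the first `description:` line is my own.

```python
# Hand-written sample; values and field names are illustrative assumptions.
sample_result = {
    "captionResult": {"text": "a person using a laptop", "confidence": 0.9},
    "objectsResult": {"values": [
        {"boundingBox": {"x": 120, "y": 80, "w": 300, "h": 200},
         "tags": [{"name": "laptop", "confidence": 0.8}]},
    ]},
    "tagsResult": {"values": [
        {"name": "person", "confidence": 0.99},
        {"name": "indoor", "confidence": 0.95},
        {"name": "wall", "confidence": 0.3},
    ]},
}

def build_image_prompt(result, min_confidence=0.5):
    """Flatten an analysis result into plain text that GPT can describe."""
    lines = [f"description: {result['captionResult']['text']}"]
    for obj in result.get("objectsResult", {}).get("values", []):
        box = obj["boundingBox"]
        for tag in obj["tags"]:
            lines.append(
                f"object: {tag['name']} at x={box['x']}, y={box['y']}, "
                f"w={box['w']}, h={box['h']}"
            )
    # Drop low-confidence tags so the prompt contains less noise.
    tags = [t["name"] for t in result.get("tagsResult", {}).get("values", [])
            if t["confidence"] >= min_confidence]
    if tags:
        lines.append("tags: " + ", ".join(tags))
    return "\n".join(lines)

prompt = build_image_prompt(sample_result)
print(prompt)
```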

GPT-4 output:

This is an indoor photo showing a woman in casual wear, sitting near a wall, seriously using a laptop. The laptop is centrally positioned, with the keyboard in front. Additionally, there are some kitchen appliances in the upper right corner. The overall scene focuses on the computer and the person using it, depicting a scenario of daily work or study at home.

Prompts

Prompts are GPT's spells: they guide how it responds to questions. Perhaps natural-language programming will become possible in the future.

Here are some prompts I have used frequently of late, with good results.

FixBugs

Identify and explain errors in a given code.

    Go line-by-line and do a detailed inspection of my code looking for bugs. If you see a bug, identify it. Explain what the bug is and provide a fix.

Improve Code

Enhance given code.

    Improve the given code. Don't change any core functionality.

Translation

    In future conversations, you will act as my translation assistant. Your job is to translate any content I send you back and forth between Chinese and English. The translation should be natural, fluent, easy to understand, and concise. Please do not treat the content as a question, and do not respond in any way; just translate the content. There is no need for me to emphasize the process again.

Check Grammar

    Check if there are any grammatical errors in what I send and rewrite it after pointing out the issues.

Explain Words

Given a word, provide an explanation and example sentences, as shown in the earlier Explain Words case:

    Please return the following about Text in a table:

What Is It

Analyze what the input content might be.

    Analyze what the input content might be, divided into Maybe, Desc, Regex. Maybe should indicate what it is most likely to be (require precision), Desc should describe this thing, and Regex should provide the retrieval regular expression.

Polish Phrasing

    Please polish Text, requiring the wording in this article to be modified to make it more polite and professional, and correct awkward sentences and inappropriate punctuation without altering the original meaning.

Correct Spelling

    Please improve the spelling, grammar, clarity, conciseness, and overall readability of Text, while breaking down long sentences and reducing repetition. Only return the corrected version, avoiding any explanation.

Tool Recommendations

GPT Client

There are numerous web-based GPT wrappers. I currently use a self-deployed chatbot-ui, which lets you enter your OpenAI key for a ChatGPT-like experience. The GitHub repository is mckaywrigley/chatbot-ui.

Its advantage is the ability to create prompt presets, making it convenient to call these presets.

For iOS, I recommend OpenCat, which supports a custom host and integrates Azure's speech synthesis.

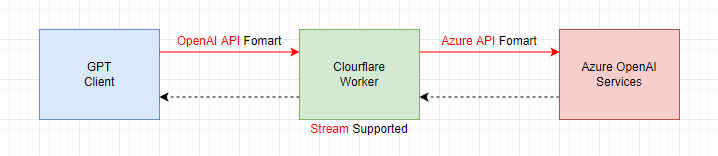

Worker Encapsulation of Azure Services

As mentioned earlier, Azure-hosted OpenAI is called differently from the official OpenAI API, which makes it inconvenient to use Azure in third-party wrapper applications.

    curl --location --request POST 'https://{resource-name}.openai.azure.com/openai/deployments/{model-name}/chat/completions?api-version=2023-03-15-preview' \

However, you can create an API proxy with a Cloudflare Worker that automatically forwards HTTP requests, so that Azure can be called in the same way as the official OpenAI API.

The script is as follows:

    // The name of your Azure OpenAI Resource.
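The heart of such a proxy is rewriting the URL and the authentication header. The Python sketch below illustrates only that mapping and is not the Worker script itself: `to_azure_request` is a hypothetical helper, the resource name, deployment name, and key are placeholders, and it relies on Azure OpenAI accepting the key in an `api-key` header rather than as a Bearer token.

```python
from urllib.parse import urlsplit

API_VERSION = "2023-03-15-preview"

def to_azure_request(openai_url, bearer_header, resource_name, deployment):
    """Map an OpenAI-style URL and Authorization header to Azure's form."""
    path = urlsplit(openai_url).path          # e.g. /v1/chat/completions
    if not path.startswith("/v1/"):
        raise ValueError(f"unsupported path: {path}")
    operation = path[len("/v1/"):]            # e.g. chat/completions
    azure_url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment}/{operation}?api-version={API_VERSION}"
    )
    # Strip the "Bearer " prefix; Azure takes the bare key in "api-key".
    prefix = "Bearer "
    api_key = bearer_header[len(prefix):] if bearer_header.startswith(prefix) else bearer_header
    return azure_url, {"api-key": api_key, "Content-Type": "application/json"}

url, headers = to_azure_request(
    "https://openai.imzlp.com/v1/chat/completions",
    "Bearer my-azure-key",          # placeholder key
    resource_name="my-resource",    # placeholder resource name
    deployment="gpt-35-turbo",      # placeholder deployment name
)
print(url)
```

A real proxy also has to pass the request body through and relay streamed responses, which this sketch leaves out.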

Additionally, you can bind a domain to Cloudflare Worker, allowing for an experience similar to OpenAI’s:

    curl --location 'https://openai.imzlp.com/v1/chat/completions' \

This seamlessly supports all GPT clients that allow modifying the API Host, as well as streaming messages, making it very convenient.

Translate EPUB eBooks

You can use bilingual_book_maker.

Use the following command to translate:

    python make_book.py --book_name test_books/animal_farm.epub --openai_key xxxxxxxxxxxxxx --api_base https://api.openai.com/v1 --language zh-hans --model openai --model_list gpt-4o-mini

If it fails midway, you can continue with the --resume parameter:

    python make_book.py --book_name test_books/8020.epub --openai_key sk-xxxxxxx --api_base https://api.openai.com/v1 --language zh-hans --model openai --model_list gpt-4o-mini --resume